ChatGPT and Confidentiality: How Safe is Your Data?

Posted on April 11, 2025 by Peter Tooke

Artificial Intelligence (AI) is arguably the most groundbreaking technological innovation of recent times. It’s having an increasingly powerful impact on all our lives, and generative chatbots like ChatGPT have become indispensable tools for businesses looking to boost productivity, improve decision-making, automate tasks, reduce human error, and cut costs.

Businesses that are leveraging the benefits of AI-powered tools like ChatGPT are gaining a clear competitive edge. But when it comes to data protection and online security, is ChatGPT confidential? Or are there things you shouldn’t disclose, to keep your sensitive information out of the hands of cybercriminals?

You’re about to find out.

Is ChatGPT confidential?

ChatGPT is not inherently confidential, as it is not designed to securely handle sensitive data such as passwords, financial details, or personally identifiable information. While OpenAI uses encryption and other security measures to protect user interactions, the Free and Plus versions of ChatGPT save your chat history and may use your conversations to train future models unless you opt out. This creates a potential risk of unauthorised access or data breaches.

Additionally, while the Team and Enterprise versions of ChatGPT offer stronger security controls and, by default, do not use your conversations for model training, no AI chatbot should be considered entirely private. Any data entered into ChatGPT could be exposed through security vulnerabilities or human error, so it is critical to avoid sharing confidential or proprietary information on the platform.

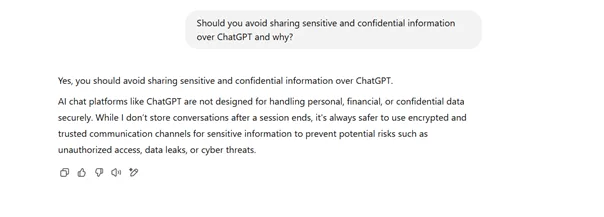

If you need convincing, ask ChatGPT yourself. We did, and this was its generated response:

Q: Should you avoid sharing sensitive and confidential information over ChatGPT?

ChatGPT: “Yes, you should avoid sharing sensitive and confidential information over ChatGPT.

AI chat platforms like ChatGPT are not designed for handling personal, financial, or confidential data securely. While I don’t store conversations after a session ends, it’s always safer to use encrypted and trusted communication channels for sensitive information to prevent potential risks such as unauthorized access, data leaks, or cyber threats.”

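So, in its own generated words, treat ChatGPT as you would any digital chatbot: never share sensitive information on the platform because of the security risks and potential for data theft. Note, too, that its claim not to store conversations after a session ends does not hold for the Free and Plus versions, which keep your chat history by default.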

For business owners, that means trusting and training your employees not to use ChatGPT for anything confidential that could open a window of opportunity for cybercriminals looking to steal sensitive data and access your network.

With effective AI readiness training, your staff can learn how to use ChatGPT safely, unlocking its full potential while maintaining data security.

If you’re still asking, ‘Is ChatGPT confidential?’, let’s dig a little deeper and highlight the things everyone across your business should avoid doing.

Why isn’t ChatGPT confidential?

A common misconception is that ChatGPT continuously learns from every conversation as it happens. It does not: the Free and Plus versions do not learn from your conversations in real time. However, OpenAI stores these conversations and, subject to its data policies and your settings, may use them to train future versions of the model. So while an individual conversation does not change ChatGPT’s answers straight away, it may contribute to broader model updates over time. By contrast, OpenAI’s ChatGPT Team and Enterprise versions do not use business conversations for model training by default. This makes them a more secure option for businesses handling sensitive data and personal information, as they offer greater privacy controls than the Free and Plus versions.

While ChatGPT does not learn from individual user interactions in real-time, OpenAI continuously refines the model using broader datasets. Increased user engagement helps improve future iterations of the AI by enhancing its ability to understand language nuances, idioms, and contextual accuracy over time.

If your staff are using ChatGPT to share personal and confidential information, they are not only putting their data at risk but may inadvertently weaken your company’s cyber defences. When you share sensitive information, you lose control over who can access it. ChatGPT might not share your personal data with anyone, but there’s always a risk that a malicious actor could gain access to your account and read your conversations.

There is also a longer-term risk. If your conversations are used to train future models, what you type, including any confidential information entered at home or in the workplace, could in principle influence the responses the model gives to other users.

Ultimately, it’s essential to weigh up these confidentiality risks when using ChatGPT. For instance, if you ask ChatGPT to write your CV, your name, address, phone numbers and email address may be stored in your chat history, where they could be stolen by cybercriminals if your account or OpenAI’s systems were compromised. The same is true of work-related information, including spreadsheets, reports and other valuable data. As with any digital platform, it’s always best to avoid sharing sensitive information with ChatGPT or any other AI chatbot.

In terms of confidentiality, are all ChatGPT versions the same?

Not all ChatGPT versions offer the same level of security. Higher-tier options, like ChatGPT Team and Enterprise, provide enhanced privacy controls.

For example, the Free and Plus versions of ChatGPT may use your inputs to train the model and generate better and more accurate responses, unless you turn this off in the data controls settings. Team and Enterprise versions, however, do not use your conversations for training by default.

It is always best to avoid sharing sensitive information with any AI chatbot, including ChatGPT, to reduce the risk of human error and of that information ending up in the hands of cybercriminals.

What should you avoid sharing on ChatGPT?

Despite its many advantages to businesses and individuals, there are serious risks associated with entering confidential information into AI models like ChatGPT.

If you’re wondering how to reduce the risk of a data breach in your organisation, do not share these five critical things with ChatGPT, and back up individual caution with organisational safeguards.

1. Personally Identifiable Information (PII)

Cybercriminals are desperate to get their hands on your Personally Identifiable Information (PII). This includes everything from your full name and date of birth to social security numbers and email addresses. Like any online service, AI tools such as ChatGPT can be breached, and PII exposed this way can fuel data theft, identity theft, financial fraud and more.

Cybercriminals will attempt to use this information to impersonate other people, gain unauthorised access to accounts, or use their identity to launch phishing attacks. Therefore, workers must exercise extreme caution when sharing personal information with any chatbot to safeguard their data and identities and protect IT networks from bad actors.
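For organisations that want a technical backstop behind this advice, one option is a simple filter that strips obvious PII from text before anyone pastes it into a chatbot. The Python sketch below is illustrative only: the `redact_pii` helper and its regular expressions are our own assumptions, not an OpenAI feature, and pattern matching like this will miss plenty of PII (names and postal addresses, for example), so treat it as a safety net rather than a guarantee.

```python
import re

# Hypothetical patterns for illustration; this is not a complete PII detector.
# Email addresses such as name@example.com
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# An optional leading +, then 10 to 13 digits, optionally separated by single
# spaces or hyphens (a rough phone-number shape)
PHONE = re.compile(r"\+?\d(?:[ -]?\d){9,12}")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone-number-like digit runs with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


if __name__ == "__main__":
    prompt = "Write a CV for Jane, jane.doe@example.com, 07700 900123."
    print(redact_pii(prompt))
```

Running it prints `Write a CV for Jane, [EMAIL], [PHONE].`, so the request still makes sense to the chatbot without the contact details. The name "Jane" is not caught, which shows the limits of this approach and why staff training still matters.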

2. Passwords and login details

Passwords and login credentials work as digital keys that unlock our online identities and personal information. They also give us access to company systems, files, and data. Sharing this type of confidential information on ChatGPT could make your business vulnerable to unauthorised access by malicious third parties. Passwords should always be strong and unique and never shared with anyone including AI models. Also, adopting additional security like multi-factor authentication will tighten data security further and mitigate the risk of a cyberattack.

3. Bank details and financial information

You should never divulge your private banking details to any AI model, including ChatGPT. Whether it’s credit card numbers, bank account information, or preferred payment methods, it’s vital to use encrypted and secure channels when discussing financial matters online or completing a transaction. The consequences of a financial data breach can be severe, ranging from fraudulent transactions to emptied bank accounts. Users should therefore make sure their financial information is always protected and never shared on ChatGPT, especially in the workplace.

4. Private and confidential information

If confidential information is entered into ChatGPT, it could be exposed through human error or under data retention policies, compromising your privacy and your company’s reputation. In the workplace, this could lead to legal problems and breaches of trust, and harm business relationships. That is why it’s important to limit what you share with ChatGPT to only what is necessary and keep those secrets to yourself.

5. Intellectual property

From intellectual property and proprietary information to copyrighted materials and patents, sharing creative details and inspiration in the workplace via ChatGPT poses significant risks. For starters, it can lead to unauthorised use and potential theft, and be detrimental to business operations. Users should always refrain from disclosing any type of intellectual property to ChatGPT. Instead, it should be safeguarded to protect ownership rights and preserve its commercial value.

6. Implement organisational safeguards and AI usage policies

While individual caution is important, businesses must take a proactive approach to AI security by implementing clear policies and safeguards. Relying solely on employees to avoid sharing sensitive data is not enough; organisations should establish strict access controls, formal AI usage guidelines, and continuous monitoring to mitigate risks effectively.

First, businesses should restrict AI usage to specific work tasks, ensuring that employees only interact with AI models like ChatGPT in ways that align with company security policies. This prevents unauthorised or risky use cases, such as employees unknowingly inputting confidential information.

Second, cybersecurity training should be mandatory for all staff using AI tools. Employees need to understand not only what data should never be shared but also the potential risks of AI-generated misinformation or security vulnerabilities.

Finally, businesses should implement data governance policies that allow for oversight of AI-related activities. This includes tracking how AI tools are used within the organisation, ensuring compliance with regulatory standards, and regularly updating internal AI policies as technology evolves. By embedding these safeguards into the workplace, companies can benefit from AI innovation while maintaining strong data security and confidentiality.
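Parts of this oversight can be automated. As a rough sketch, assuming a hypothetical internal gateway that every AI request passes through, a check like the one below could enforce an approved-tools list, block prompts that look like they contain passwords or card numbers, and keep an audit trail of every decision. The tool names, patterns and `check_prompt` function are placeholders to adapt to your own policies; they do not correspond to any real product’s API.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical policy values: replace with your organisation's own approved tools.
APPROVED_TOOLS = {"chatgpt-enterprise", "chatgpt-team"}

# Illustrative patterns only; they will not catch every password or card number.
BLOCKED_PATTERNS = {
    # "password: ..." or "pwd=..." style credentials (case-insensitive)
    "credential": re.compile(r"(?i)\b(?:password|passwd|pwd)\s*[:=]\s*\S+"),
    # 13 to 16 digits, optionally separated by spaces or hyphens
    # (a rough card-number shape, with no Luhn check)
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


@dataclass
class AuditEntry:
    """One policy decision. The prompt text itself is deliberately not stored."""
    timestamp: str
    user: str
    tool: str
    allowed: bool
    reasons: list[str]


AUDIT_LOG: list[AuditEntry] = []


def check_prompt(user: str, tool: str, prompt: str) -> bool:
    """Return True if the prompt may be sent to the tool; log every decision."""
    reasons = []
    if tool not in APPROVED_TOOLS:
        reasons.append(f"unapproved tool: {tool}")
    for label, pattern in BLOCKED_PATTERNS.items():
        if pattern.search(prompt):
            reasons.append(f"contains {label}")
    allowed = not reasons
    AUDIT_LOG.append(
        AuditEntry(
            timestamp=datetime.now(timezone.utc).isoformat(),
            user=user,
            tool=tool,
            allowed=allowed,
            reasons=reasons,
        )
    )
    return allowed


if __name__ == "__main__":
    print(check_prompt("alice", "chatgpt-enterprise", "Summarise this meeting agenda."))
    print(check_prompt("bob", "free-chatbot", "My password: hunter2"))
    for entry in AUDIT_LOG:
        print(entry.user, entry.allowed, entry.reasons)
```

The audit entries record the user, tool, decision and reasons, but not the prompt text, so the log itself does not become another store of sensitive data.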

What is ChatGPT’s privacy policy?

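ChatGPT conversations are saved on OpenAI’s servers in line with its privacy policy, and may be used to improve the model’s responses and understanding of language. OpenAI states that it does not sell user data, although its privacy policy describes circumstances in which data may be disclosed, such as to service providers or where required by law.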

OpenAI uses security measures such as encryption and access controls and says it complies with data protection laws such as GDPR.

Yet, despite these safety measures, ChatGPT still has data security weaknesses. Like all digital platforms, there’s a risk of unauthorised access, data breaches and theft of sensitive information. So, if your workers are sharing personal details and sensitive information via ChatGPT, they could be exposing your organisation to a cyberattack.

Can businesses leverage AI safely in the workplace?

Yes, many businesses across a vast range of industries already use AI safely in the workplace. With the right safety considerations and policies, AI is a powerful tool for transforming the workplace, enhancing productivity, and reducing human error.

The following recommendations will help your business use AI safely at work:

- Implement formal AI policies: Protect individuals and the company by adopting strict policies on how AI is used in the workplace. These policies should also reflect the organisation’s latest training and guidance.

- Only use company-approved AI models: A multitude of AI options are now available, including free, paid and public options. Ensure all employees only use company-approved versions that carry appropriate security and controls in line with wider cybersecurity protocols.

- Use AI with caution: Exercise caution when inputting any data into ChatGPT or other AI chatbots. Again, avoid revealing sensitive information and confidential data about customers or employees to reduce the risk of data theft.

- Always verify AI responses: Like any AI model, ChatGPT is not perfect. It is important to double-check any AI-generated responses before using them in the workplace, especially if they could affect someone’s health, safety or finances. Google’s search quality guidelines call topics like these ‘Your Money or Your Life’ (YMYL) because errors could have serious consequences for users.

Looking for an AI partner to protect your critical business data?

Strengthen your organisation’s defences against AI-related security risks. Our AI training empowers employees to use ChatGPT safely without exposing sensitive business data. Contact us today to implement AI security best practices.